Nvidia wants cars to think like humans, and Mercedes is first to try

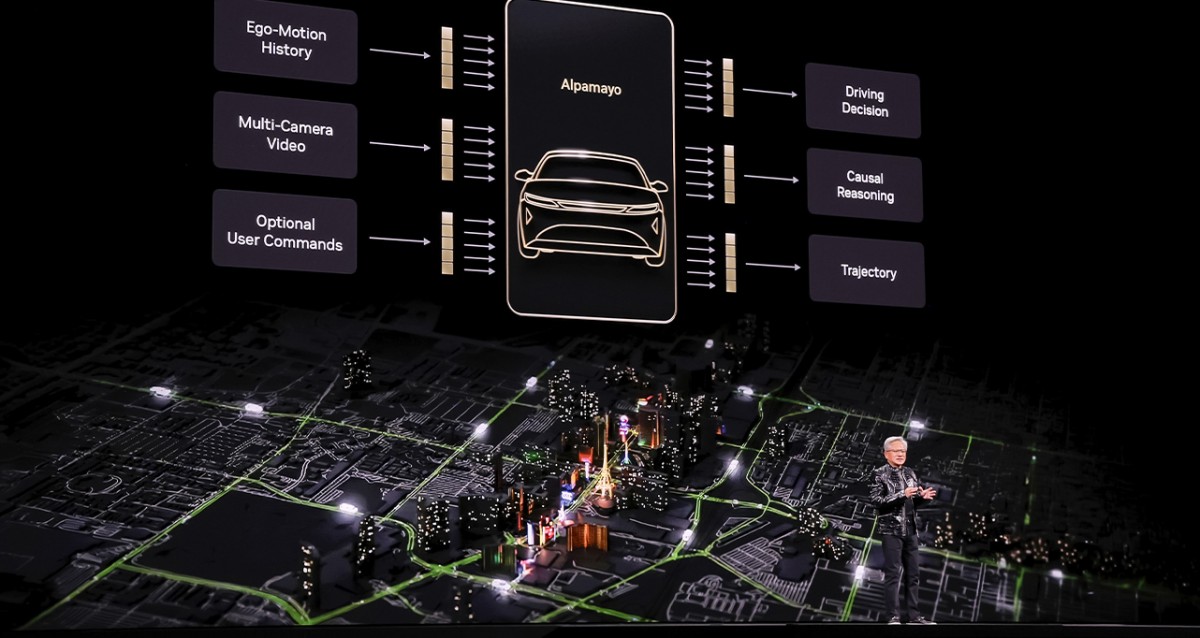

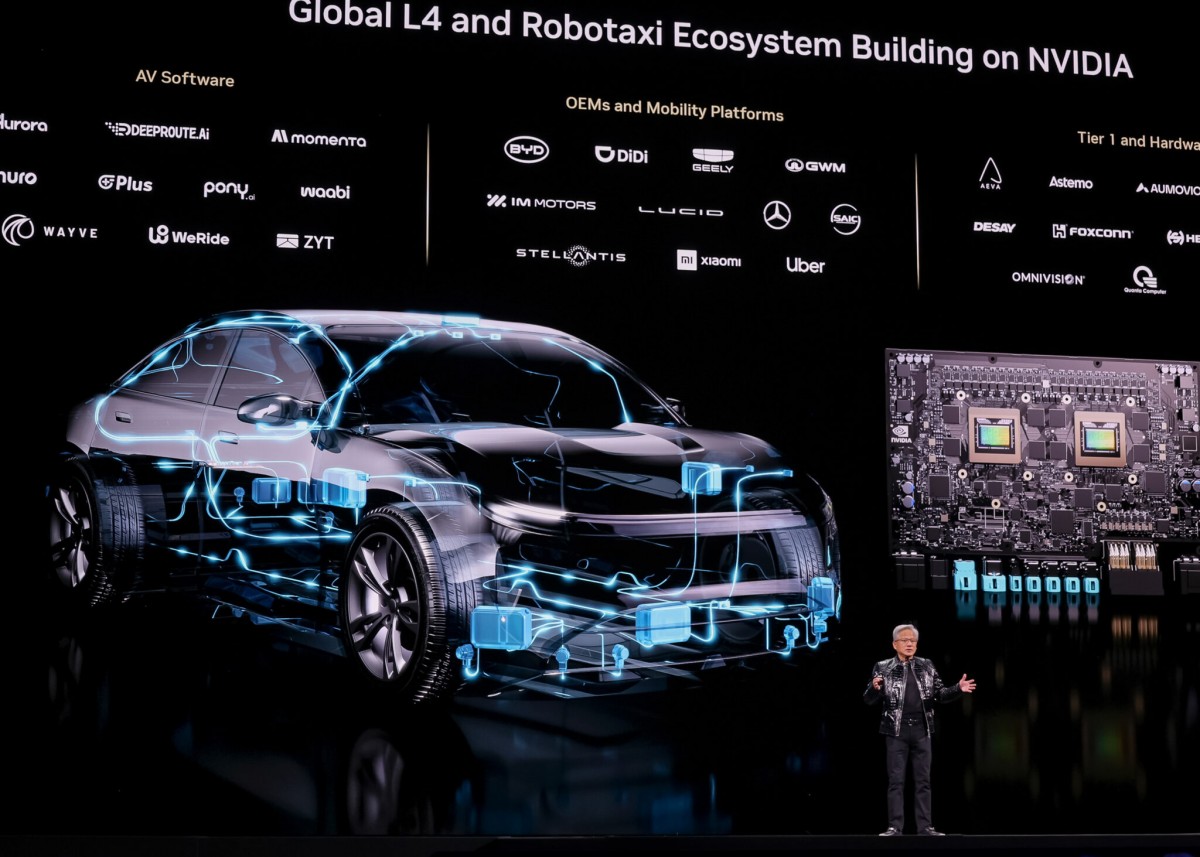

CES 2026 has already delivered plenty of shiny gadgets, but the biggest news so far comes from a company best known for its computer chips. Nvidia took the stage today to change how we talk about self-driving vehicles. Automakers have long promised cars that can drive themselves; Nvidia says it is time for cars to actually think. Nvidia CEO Jensen Huang showed off a new artificial intelligence system called Alpamayo.

The new system doesn't just look at the road; it uses reasoning to understand what it sees. This is a big deal for self-driving cars, and Nvidia even calls it the "ChatGPT moment" for machines that move in the real world. Just as chatbots learned to write essays, this software learns to drive by reasoning through what it sees. Nor is the company keeping the technology to itself: it is making it available to any carmaker. The first people to experience this new "brain" will be drivers of the Mercedes-Benz CLA.

Source: Nvidia

Source: Nvidia

In the past, self-driving computers were good at spotting stop signs or other cars, but they followed strict rules, and real driving can be messy. A construction worker might wave you through a red light, or the sun might glare off a wet road. These so-called "edge cases" can confuse computers. Alpamayo is different. It uses a "chain-of-thought" process: it takes in video of the road, plans a path, and then explains why it chose that path. It can tell you, for example, "I moved left because the truck ahead looked like it was parking."
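To make the "plan a path, then explain it" idea more concrete, here is a minimal Python sketch of what one logged decision could look like: a planned path stored as waypoints, paired with a plain-language explanation and saved as JSON so a person can review it later. The class names, fields, coordinate convention, and scene ID are all hypothetical illustrations; this is not Nvidia's actual Alpamayo output format.

```python
from dataclasses import dataclass, field, asdict
import json


@dataclass
class Waypoint:
    # Hypothetical convention: metres ahead of the car (x) and sideways (y, left is positive)
    x_m: float
    y_m: float


@dataclass
class DecisionRecord:
    """Hypothetical record pairing a planned path with the model's explanation."""
    scene_id: str
    path: list[Waypoint] = field(default_factory=list)
    explanation: str = ""

    def lateral_shift_m(self) -> float:
        # Sideways offset of the final waypoint; 0.0 if no path was planned
        return self.path[-1].y_m if self.path else 0.0

    def to_json(self) -> str:
        # asdict() also converts the nested Waypoint objects into plain dicts
        return json.dumps(asdict(self))


record = DecisionRecord(
    scene_id="example-001",  # made-up identifier for illustration
    path=[Waypoint(5.0, 0.0), Waypoint(10.0, 0.8), Waypoint(15.0, 1.5)],
    explanation="I moved left because the truck ahead looked like it was parking.",
)
print(record.lateral_shift_m())  # 1.5
print(record.to_json())
```

Keeping the explanation in the same record as the path it justifies means anyone reading the log sees what the car planned and why, side by side.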

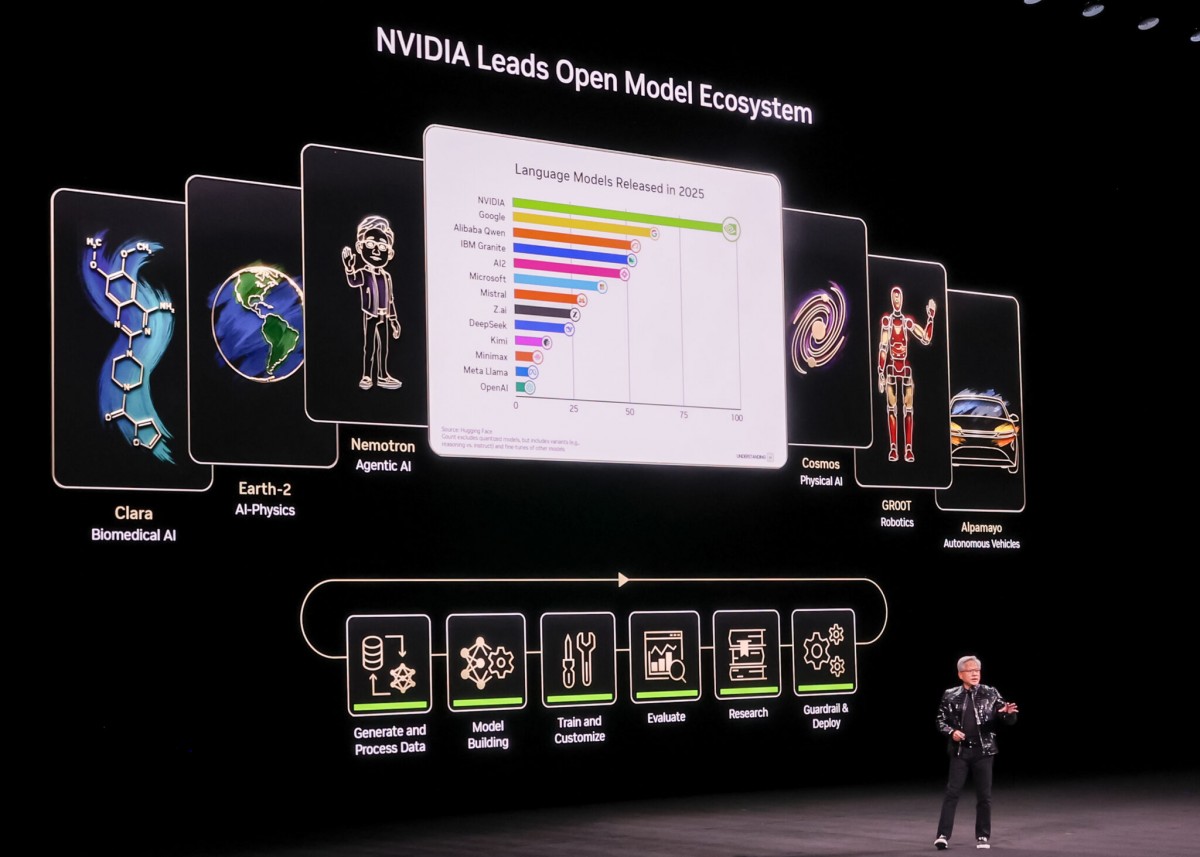

Jensen Huang explained that this ability to think through rare problems is what makes the system special. To help other companies build on it, Nvidia is releasing the model's "weights," the learned parameters that encode what the AI knows, to the public on Hugging Face. It is also releasing a simulation tool called AlpaSim and a large driving dataset with more than 1,700 hours of video covering complex driving situations. The strategy looks a lot like what Google did with Android phones: Nvidia wants to be the operating system for everyone.

We finally have a solid timeline for when this tech hits the road. The partnership with Mercedes-Benz has been in the news for a long time, but now it is real. The new Mercedes-Benz CLA will be the first production vehicle to use the full Nvidia setup. If you live in the United States, you can see these cars in dealerships in the first quarter of 2026. Europe will get the updated system in the second quarter of 2026, and drivers in Asia will have to wait until later in the year. This rollout puts Mercedes' new electric cars at the front of the pack for tech lovers.

But what does this actually do for the driver? Mercedes calls the system MB.DRIVE ASSIST PRO. It combines navigation with driver assistance. You press a button, and the car helps you get from a parking lot to your final destination, including on city streets. It sounds like magic, but there is a catch. This is officially a "Level 2+" system: the car helps, but you are still the captain. You have to pay attention at all times, and it is not a robotaxi yet. The system does, however, support "cooperative steering." If you grab the wheel to dodge a pothole, the system won't shut off. It works with you.

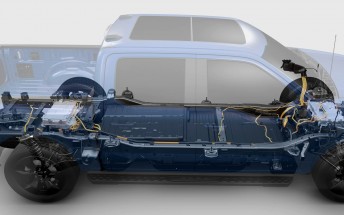

To make all this happen, the car needs eyes. The Mercedes-Benz CLA comes packed with around 30 sensors, including 10 cameras to see the world, 5 radar sensors to detect speed and distance, and 12 ultrasonic sensors for objects close to the car. The car's onboard computer processes this data as you drive. Behind the scenes, Nvidia's new Vera Rubin platform, a powerful six-chip system that succeeds Blackwell, does the heavy lifting in data centers: its Vera CPUs and Rubin GPUs are the muscle training the AI that ends up in the car.

This approach addresses a big problem for safety regulators. Government officials often worry about AI because it is usually a "black box," meaning it is hard to tell why the computer made a mistake. Since Alpamayo explains its reasoning, regulators can review its "reasoning trace" to follow the logic behind each decision. This transparency is a smart move. It also helps startups and legacy carmakers that cannot build their own self-driving teams: they can simply buy Nvidia's chips and use the freely available software.

The race for better EVs and autonomous driving is heating up. Mercedes wants to deliver what Tesla has promised for years with its Full Self-Driving (FSD) package, which, despite the fancy name, is also a Level 2 driver assist. By using an open system that anyone can inspect, Mercedes and Nvidia might shake up the industry. When the CLA reaches US dealerships in early 2026 with these features, the idea of a smart, reasoning car will stop being science fiction and start being a product you can actually buy.
